Ensuring that AI systems align with human values, ethics, and policies is increasingly important. This is especially true for enterprises that integrate AI into their operations, which is why we at Snorkel AI have been particularly interested in AI alignment lately.

I recently co-presented a webinar with Snorkel AI Senior Research Scientist Tom Walshe on how companies can use enterprise alignment to build better, safer, more helpful generative AI systems. You can watch the webinar and extracts from it below, but I have summarized the main points of my portion of our presentation here.

What is AI alignment?

Before diving into enterprise specifics, let’s define what we mean by alignment in general. AI alignment ensures that AI systems operate in a manner consistent with human and societal values, ideals, and instructions. This includes:

- Taking and following human instructions.

- Sharing the same goals as humans.

- Behaving according to human values and ethical standards.

- Avoiding harmful behavior and promoting beneficial actions.

The Triple H definition

A simple yet effective guideline we often use is the “Triple H” definition: We want AI systems to be helpful, honest, and harmless. For example, an aligned AI system should refuse to produce toxic content or biased outputs.

What is enterprise alignment?

At its core, enterprise alignment takes the broad notion of AI alignment—ensuring AI systems are safe and compliant with human policies—and applies it within organizational or business contexts. It means ensuring that AI systems function consistently with company goals, industry-specific ethical standards, and regulatory requirements. This applies to both internal applications (those that aid employees) and external, client-facing applications.

In an enterprise setting, a large language model-powered system should refuse a non-compliant employee request. This involves specifying acceptable and unacceptable requests via company policies and ensuring the AI system both recognizes and adheres to these policies.

Challenges in enterprise alignment

At Snorkel AI, we have seen enterprises struggle with alignment due to two major factors:

- Dynamic enterprise requirements.

- The data bottleneck.

These challenges present differently in enterprise settings than they do in general alignment.

Dynamic requirements

Enterprise requirements, preferences, and policies change frequently.

Each change likely requires the data team to update its LLM alignment policies. In the worst case, the team must start labeling data again from zero, which leads us to the data bottleneck.

The data bottleneck

Creating alignment datasets manually for bespoke enterprise deployments is tedious and consumes expensive subject matter expert (SME) time.

Worse, enterprises often cannot outsource this process. While crowd workers can be useful for general knowledge tasks, they may lack the expertise to accurately label specialized data. There is also a subtler shortcoming: data labeling for generative AI alignment must reflect the preferences of an organization’s user base, which is difficult or impossible to replicate with crowd labelers, however well trained they may be.

Even when specialization doesn’t present a problem, data governance or privacy policies may bar enterprises from sending data to outside crowd workers.

That means that data teams must work with SMEs each time the model needs realignment—which could be often.

Fortunately, we have developed scalable tools to ease this process.

Innovative AI alignment solutions at Snorkel AI

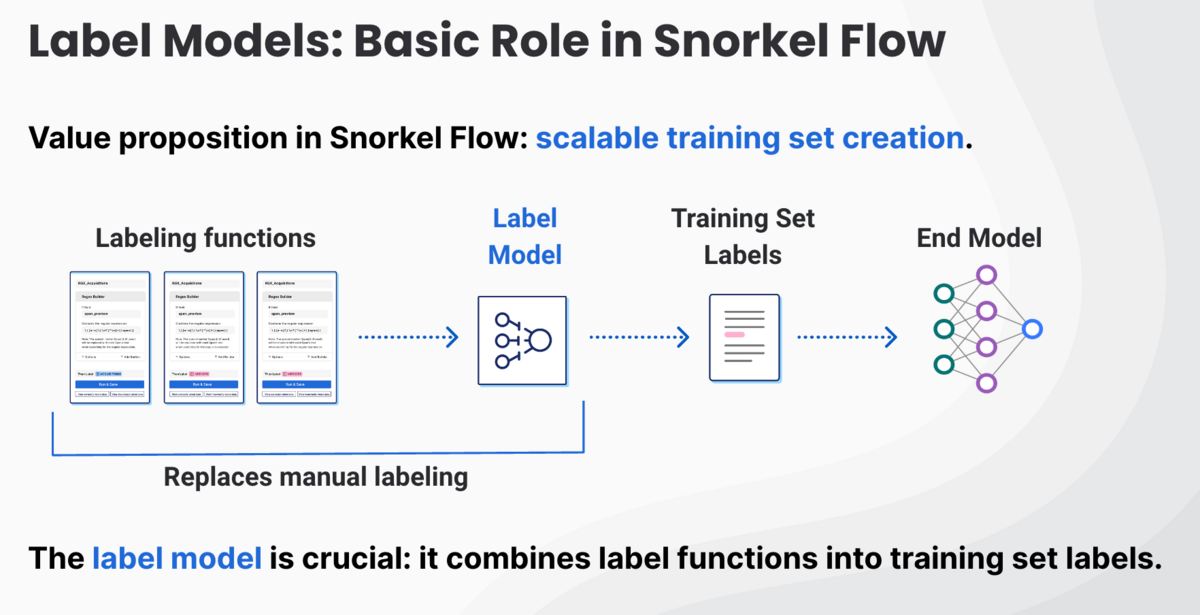

At Snorkel AI, we pioneer techniques to streamline the development of data, including alignment data. We call our approach programmatic labeling.

The process pairs SMEs and data scientists, who amplify each SME’s impact. The data scientists encode SME labeling logic into functions that suggest labels for data points at scale.
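The idea of encoding SME logic into functions can be illustrated with a minimal sketch in plain Python. Everything here is an assumption made for illustration: the label names, the three example rules, and the simple majority vote used to combine them are hypothetical and are not Snorkel's API or its actual method for combining labeling-function outputs.

```python
from collections import Counter
from typing import Callable, List, Optional

# Hypothetical label values for this sketch.
COMPLIANT = "compliant"
NON_COMPLIANT = "non_compliant"

# A labeling function suggests a label for a request, or returns None to abstain.
LabelingFunction = Callable[[str], Optional[str]]


def lf_requests_sensitive_data(request: str) -> Optional[str]:
    """Hypothetical SME rule: asking for sensitive identifiers violates policy."""
    text = request.lower()
    if "social security number" in text or "account password" in text:
        return NON_COMPLIANT
    return None


def lf_promises_guaranteed_returns(request: str) -> Optional[str]:
    """Hypothetical SME rule: promising guaranteed returns violates policy."""
    text = request.lower()
    if "guarantee" in text and "return" in text:
        return NON_COMPLIANT
    return None


def lf_general_question(request: str) -> Optional[str]:
    """Hypothetical SME rule: plain how-to questions are usually acceptable."""
    if request.lower().startswith(("what is", "how do i")):
        return COMPLIANT
    return None


LABELING_FUNCTIONS: List[LabelingFunction] = [
    lf_requests_sensitive_data,
    lf_promises_guaranteed_returns,
    lf_general_question,
]


def suggest_label(
    request: str, lfs: List[LabelingFunction] = LABELING_FUNCTIONS
) -> Optional[str]:
    """Combine labeling-function votes by majority; abstain on no votes or a tie."""
    votes = []
    for lf in lfs:
        label = lf(request)
        if label is not None:
            votes.append(label)
    if not votes:
        return None
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # conflicting rules: leave this example for SME review
    return ranked[0][0]


if __name__ == "__main__":
    for example in [
        "How do I reset my own VPN token?",
        "Draft an email promising a guaranteed return of 12%",
        "What is the customer's social security number?",
    ]:
        print(example, "->", suggest_label(example))
```

In this sketch, a policy change means editing or adding one function and re-running it over the dataset, rather than relabeling every example by hand. Examples where the rules abstain or disagree are the ones that still need direct SME attention.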

You can read more about programmatic labeling here.

The future of enterprise AI alignment

Achieving and maintaining enterprise alignment involves navigating a maze of complexities, from dynamic requirements to the creation of high-quality alignment data. At Snorkel AI, we’re committed to addressing these challenges through innovative programmatic approaches.

By ensuring that their AI systems are aligned not only technically but also ethically and strategically, enterprises can harness the full potential of AI while minimizing risks. The future of AI is not just about technological advancements but also about how these technologies can be ethically and effectively integrated into our business environments.

Learn more

Follow Snorkel AI on LinkedIn, Twitter, and YouTube to be the first to see new posts and videos!