With AI teams facing overwhelming demand for new generative AI use cases, Snorkel launches AI model accelerator program to address the biggest stumbling block: unstructured data

SAN FRANCISCO (June 27, 2024) — Snorkel AI today announced a multi-year Strategic Collaboration Agreement (SCA) with Amazon Web Services (AWS) to help enterprises build custom, production-ready artificial intelligence (AI) models. As part of the SCA, the companies have launched an accelerator program for Amazon SageMaker customers that is designed to deliver private fine-tuned generative AI models along with co-developed benchmarks that evaluate model performance against an organization’s unique goals and objectives.

Large language models (LLMs) are trained on massive public data sets, and their responses are derived from that training data. Most high-value enterprise AI applications, however, need to provide answers that incorporate private business information while complying with organizational and regulatory guidelines. To deliver production-quality results, generic LLMs must be privately tuned using an enterprise’s carefully curated data.

Snorkel AI has spent the last decade pioneering the practice of AI data development and helps some of the world’s most sophisticated enterprises curate their data to build custom AI services. AWS provides a robust framework for responsible AI development with Amazon SageMaker, a fully managed service that brings together a broad set of tools to build, train, and deploy generative AI and machine learning (ML) models. The collaboration between AWS and Snorkel AI will make it easier and faster for enterprises to build AI applications tailored to their unique requirements.

“Generative AI quality is entirely dependent on the data used to tune and align models,” said Henry Ehrenberg, Snorkel AI co-founder. “Enterprises working on custom use cases need to quickly identify the best off-the-shelf model, then tune it with scalable and adaptable approaches to developing their data, and deploy these models with enterprise-grade security and privacy. Our relationship with AWS helps organizations around the globe accelerate the demo-to-production pipeline. This is fantastic news for our shared customers and the many AWS customers looking to transform their business with generative AI use cases.”

As part of the Snorkel and AWS Custom Model Accelerator Program, participants will work directly with Snorkel researchers and AWS experts and get early access to cutting-edge techniques from published and proprietary research. Program components include:

- Evaluation and benchmark workshops conducted collaboratively with Snorkel and AWS experts to create custom benchmarks, combining Snorkel’s experience in LLM evaluation and data operations with customers’ requirements and domain knowledge, leading to more insightful evaluations of LLM performance.

- Snorkel-led data and LLM development to support end-to-end delivery of LLMs that meet production-level performance. Models are fine-tuned and aligned using Amazon SageMaker JumpStart, with other tools such as Amazon Bedrock to follow in the future.

- Model cost optimization and serving, with the option to distill LLMs into specialized “small language models” (SLMs) that improve accuracy on enterprise-specific tasks while dramatically reducing cost.

- Exclusive access to a production-ready model optimized for a specific use case.

Learn more about what Snorkel can do for your organization

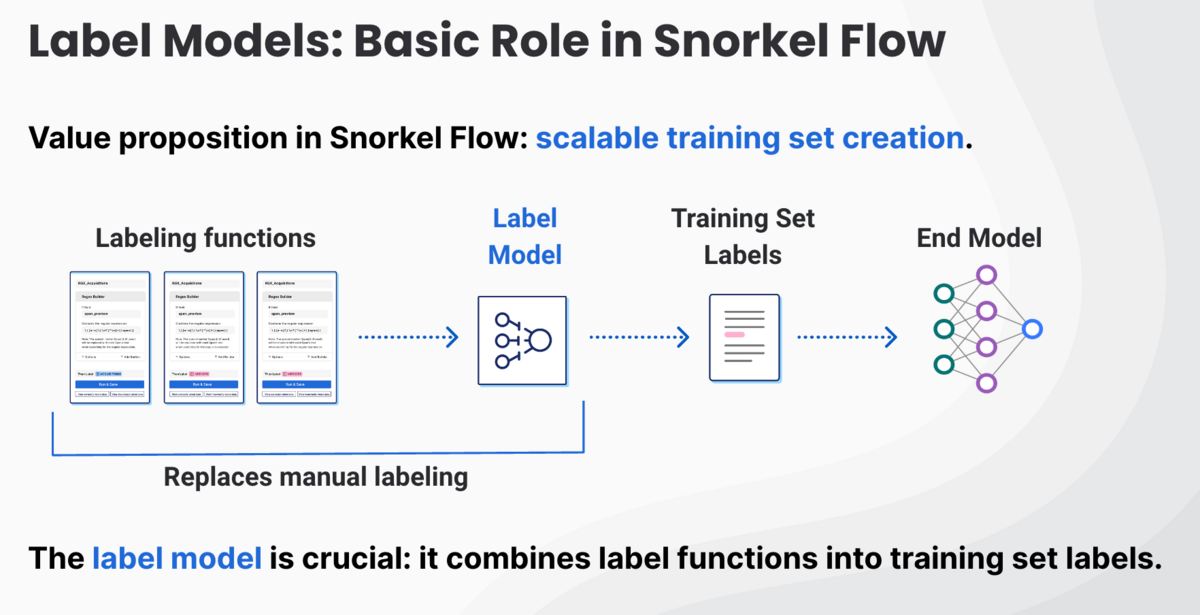

Snorkel AI offers multiple ways for enterprises to uplevel their AI capabilities. Our Snorkel Flow data development platform empowers enterprise data scientists and subject matter experts to build and deploy high-quality models end-to-end in-house. Our Snorkel Custom program puts our world-class engineers and researchers to work on your most promising challenges to deliver data sets or fully built LLM or generative AI applications, fast.

See which Snorkel option is right for you. Book a demo today.